
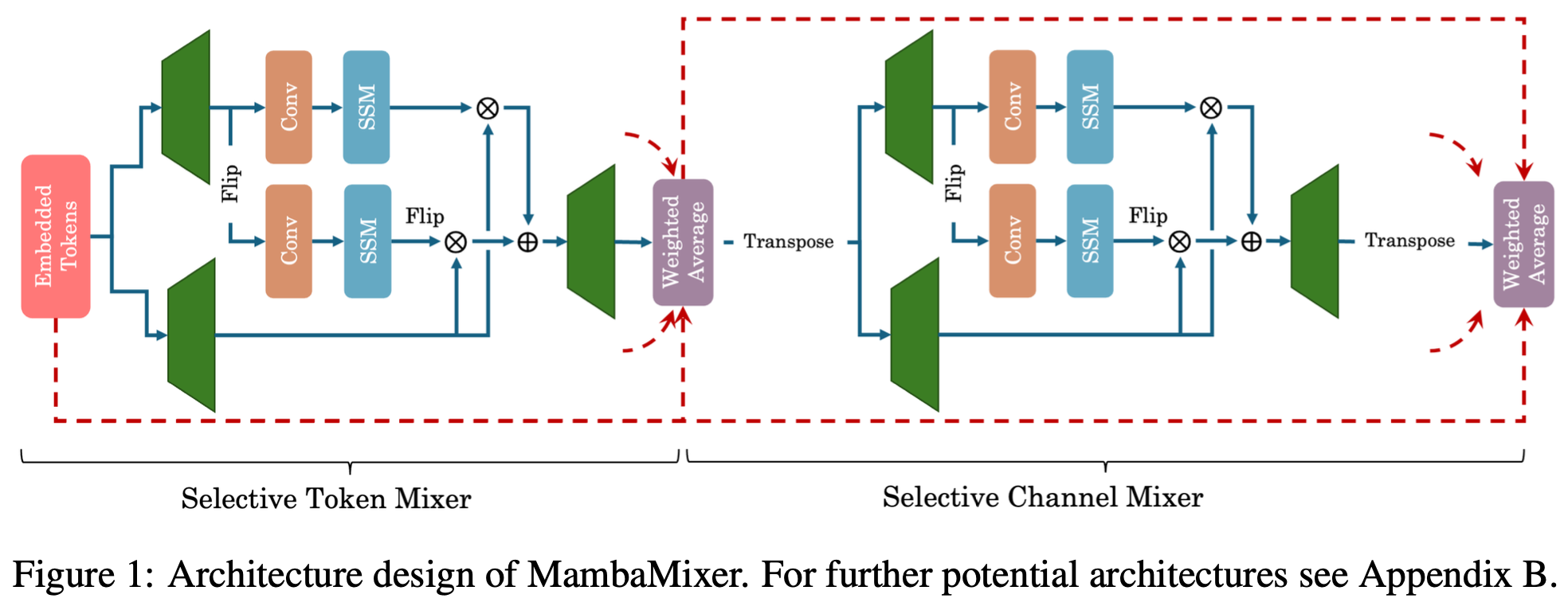

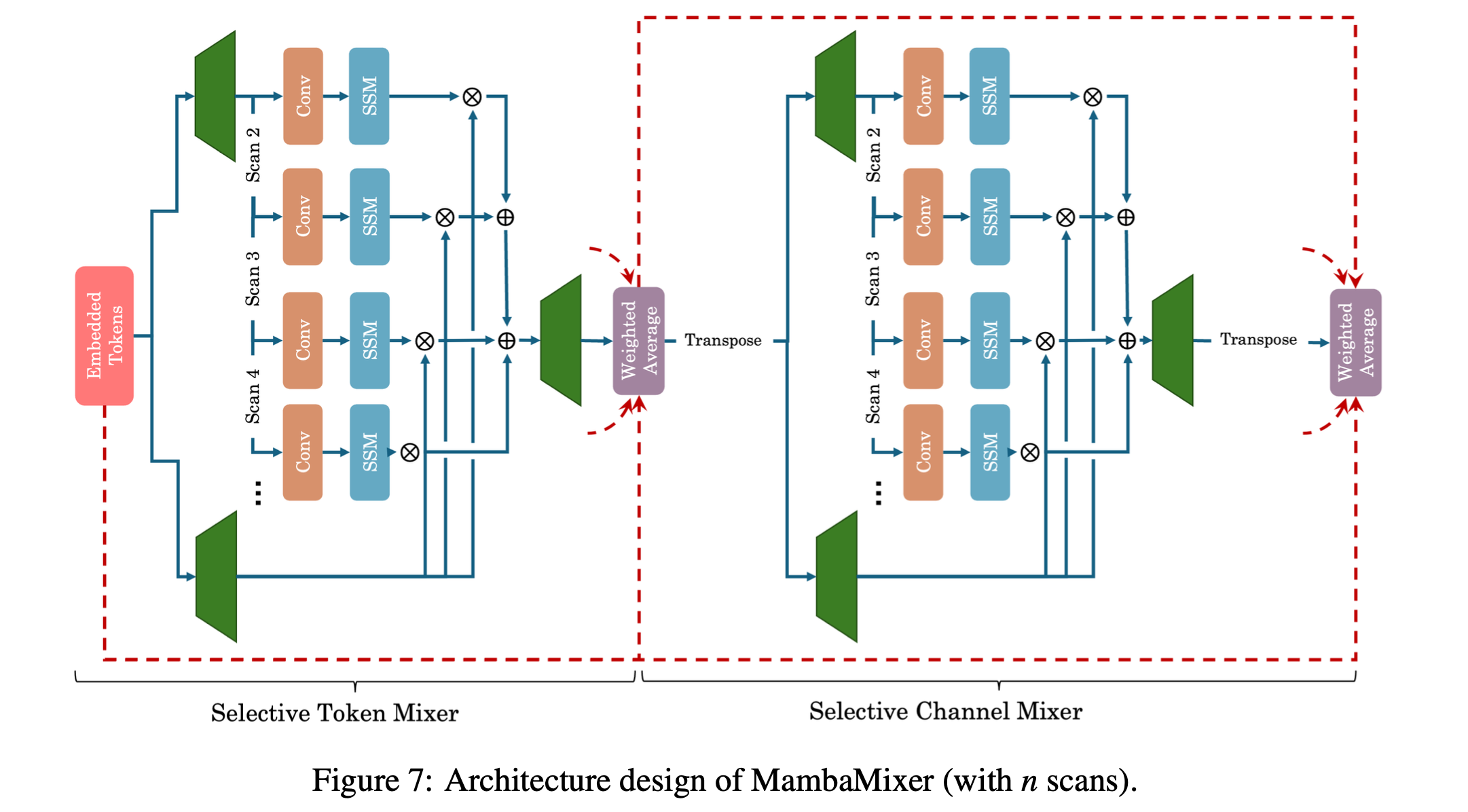

Recent advances in deep learning have mainly relied on Transformers due to their data dependency and ability to learn at scale. The attention module in these architectures, however, exhibits quadratic time and space complexity in the input size, limiting its scalability for long-sequence modeling. Despite recent attempts to design efficient and effective architecture backbones for multi-dimensional data, such as images and multivariate time series, existing models are either data-independent or fail to allow inter- and intra-dimension communication. Recently, State Space Models (SSMs), and more specifically Selective State Space Models (S6), with efficient hardware-aware implementations, have shown promising potential for long-sequence modeling. Motivated by the recent success of SSMs, we present the MambaMixer block, a new architecture with data-dependent weights that uses a dual selection mechanism across tokens and channels, called the Selective Token and Channel Mixer. MambaMixer further connects the sequential selective mixers using a weighted averaging mechanism, giving each layer direct access to the inputs and outputs of different layers. As a proof of concept, we design Vision MambaMixer (ViM2) and Time Series MambaMixer (TSM2) architectures based on the MambaMixer block and explore their performance on various vision and time series forecasting tasks. Our results underline the importance of selectively mixing across both tokens and channels. In ImageNet classification, object detection, and semantic segmentation tasks, ViM2 achieves competitive performance with well-established vision models such as ViT, MLP-Mixer, and ConvMixer, and outperforms SSM-based vision models such as ViM and VMamba. In time series forecasting, TSM2, an attention- and MLP-free architecture, achieves outstanding performance compared to state-of-the-art methods while significantly reducing computational cost.
These results show that while Transformers, cross-channel attention, and cross-channel MLPs are sufficient for good performance in practice, none of them is necessary.
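To make the dual selection and the weighted averaging more concrete, the following is a minimal NumPy sketch under stated assumptions; it is not the authors' implementation. The helper names (`selective_scan`, `init_params`, `weighted_average`, `mamba_mixer`) are hypothetical. The weights are random rather than trained, the averaging weights are uniform placeholders for learned ones, the token mixer is unidirectional, and the S6 recurrence is simplified (zero-order hold for A, Euler step for B, no convolution, gating, or normalization). It shows one idea: the same data-dependent scan is applied along the token axis and then, on the transposed input, along the channel axis, and each mixer reads a weighted average of all earlier outputs.

```python
import numpy as np


def softplus(z):
    # Numerically stable softplus, log(1 + exp(z)); keeps step sizes positive.
    return np.logaddexp(0.0, z)


def init_params(width, state_dim, rng):
    # Hypothetical random initialization; a real model would learn these.
    return {
        "A": -np.exp(rng.standard_normal((width, state_dim))),  # negative diagonal A for stability
        "W_delta": 0.1 * rng.standard_normal((width, width)),
        "W_B": 0.1 * rng.standard_normal((width, state_dim)),
        "W_C": 0.1 * rng.standard_normal((width, state_dim)),
    }


def selective_scan(x, params):
    """Simplified S6-style recurrence along axis 0 of x, shape (length, width).

    Delta, B and C are projections of x itself, so the mixing weights are
    data dependent (the "selection" in Selective SSMs).
    """
    A = params["A"]                                   # (width, N)
    delta = softplus(x @ params["W_delta"])           # (length, width)
    B = x @ params["W_B"]                             # (length, N)
    C = x @ params["W_C"]                             # (length, N)
    h = np.zeros_like(A)                              # hidden state, (width, N)
    y = np.empty_like(x)
    for t in range(x.shape[0]):
        A_bar = np.exp(delta[t][:, None] * A)         # zero-order-hold discretization of A
        B_bar = delta[t][:, None] * B[t][None, :]     # simplified (Euler) discretization of B
        h = A_bar * h + B_bar * x[t][:, None]
        y[t] = h @ C[t]                               # read out, shape (width,)
    return y


def weighted_average(outputs, logits):
    # Softmax-normalized weights over all earlier outputs (logits stand in for learned weights).
    w = np.exp(logits - logits.max())
    w = w / w.sum()
    return sum(wi * oi for wi, oi in zip(w, outputs))


def mamba_mixer(x, num_layers, state_dim=4, seed=0):
    """Token and channel mixers joined by weighted averaging; x has shape (tokens, channels)."""
    rng = np.random.default_rng(seed)
    n_tokens, n_channels = x.shape
    outputs = [x]  # block input plus every mixer output produced so far
    for _ in range(num_layers):
        token_params = init_params(n_channels, state_dim, rng)
        channel_params = init_params(n_tokens, state_dim, rng)
        # Selective token mixer: scan along the token axis.
        z = weighted_average(outputs, np.zeros(len(outputs)))
        outputs.append(selective_scan(z, token_params))
        # Selective channel mixer: the same scan on the transpose, i.e. along the channel axis.
        z = weighted_average(outputs, np.zeros(len(outputs)))
        outputs.append(selective_scan(z.T, channel_params).T)
    return outputs[-1]
```

For example, `mamba_mixer(np.random.default_rng(1).standard_normal((16, 8)), num_layers=2)` returns an array of shape `(16, 8)`. Each scan is linear in the length of the scanned axis, in contrast to the quadratic cost of attention, and because every mixer receives an average over all previous outputs, later layers can reach early features without a separate residual path.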
